-

26th March 2017, 17:03

#1

Cake!

I'm not sure if TPS will drop when loading chunks takes a bit longer. It might.

As for fixing the issue, that won't take much time. If/when I have the funds it's just a matter of ordering a drive, pulling the defective drive from the server and replacing it with the new one. The raid controller will then start rebuilding the array automatically. After that is done I'll move the virtual machines back to the SAS drives. All in all it won't take more than half an hour of button pushing. The rebuilding and virtual machine moving will take longer, but that's just a progress bar filling up.

-

27th March 2017, 00:22

#2

Administrator

Is there anything I can do to check what may be causing the tps drop?

I used /lag and the entities were around 4000, below the 10k number I have seen in the message.

Also at one point mem had 646 or so remaining.

/lag

now shows

2065 chunks, 990 entities, 126,537 tiles. Looks like it reset about 3 hours ago and is back to 19.87 tps.

Though the one on the tab screen shows a different number, about 4. Is that Bukkit tps?

Last edited by Rainnmannx; 27th March 2017 at 01:12.

-

27th March 2017, 11:04

#3

Cake!

/lag shows TPS from the Bukkit side of the server, same as the value shown when pressing TAB. Use /forge tps instead to get a more accurate reading on how the server is doing. Bukkit TPS tends to always be slightly lower than the Forge TPS and less than 20 (19.xx). To me it looks like one sits on top of the other, since Forge and Forge mods seem to take precedence over Bukkit and Bukkit plugins.

The TAB TPS being low was due to a glitch in BungeeCord and the plugin that takes care of that information. I've restarted BungeeCord and now the 'Bukkit TPS' in the TAB screen is similar to what you see when you type /lag or /tps.

Bottom pic shows output of:

/lag

/tps

/forge tps
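For anyone wondering how a TPS figure relates to tick time, here is a minimal sketch in Python. It assumes the usual Minecraft target of 20 ticks per second (50 ms per tick) and caps the result at 20. The function name is made up for illustration, and this is not necessarily how /lag, /tps or the TAB plugin calculate their numbers.

```python
TARGET_TPS = 20.0  # Minecraft aims for 20 ticks per second, i.e. 50 ms per tick


def tps_from_tick_ms(mean_tick_ms: float) -> float:
    """Convert an average tick duration in milliseconds to ticks per second.

    Hypothetical helper: the result is capped at 20 because the server
    does not run faster than its target rate even when ticks are cheap.
    """
    if mean_tick_ms <= 0:
        raise ValueError("tick time must be positive")
    return min(TARGET_TPS, 1000.0 / mean_tick_ms)


print(tps_from_tick_ms(25.0))   # ticks finish early, still capped at 20
print(tps_from_tick_ms(100.0))  # ticks take twice the budget, so 10
```

So a reading of about 4 would mean ticks averaging around 250 ms, which is why that TAB value looked wrong next to 19.87.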

-

27th March 2017, 11:49

#4

Cake!

I received three donations last night. Thanks guys!

With this I'm going to purchase two new drives. Unfortunately the drives I linked above won't ship to The Netherlands. Buying them here (European Amazon) they are 89.98 euro, which means that to avoid an angry wife we could really use another donation or two.

The two drives will replace the faulty one and expand the array to give us a net storage of 900GB with more I/O. (Raid-5 capacity = n-1, meaning: 4x300 - 300) The drives should arrive in a few days.
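The n-1 rule can be sketched in a few lines of Python. This is just the arithmetic from the post, assuming all drives are the same size; the function name is made up for illustration.

```python
def raid5_usable_gb(drive_count: int, drive_size_gb: int) -> int:
    """Usable capacity of a raid-5 array of equal-sized drives.

    One drive's worth of space is used for parity, so the usable
    capacity is (n - 1) times the size of a single drive.
    """
    if drive_count < 3:
        raise ValueError("raid-5 needs at least three drives")
    return (drive_count - 1) * drive_size_gb


print(raid5_usable_gb(4, 300))  # 900, the expanded array
print(raid5_usable_gb(3, 300))  # 600, what three such drives would give
```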

-

30th March 2017, 15:39

#5

Cake!

The new hard drives were delivered today. I put them in the server and, although this is something for which the server can remain on, it went down anyway. Reason being my 2-year-old, who saw a shiny power button and just had to push it. I still haven't figured out how I can configure ESXi to ignore the power button. So... apologies for the unscheduled downtime.

The new drives have been put in a raid-5 array and it's currently initializing. We're growing from 600 to 900GB (4x300 - 300) and when that's done I'll start moving virtual machines back to this array. After that server performance should be back to normal.

-

-

Cake!

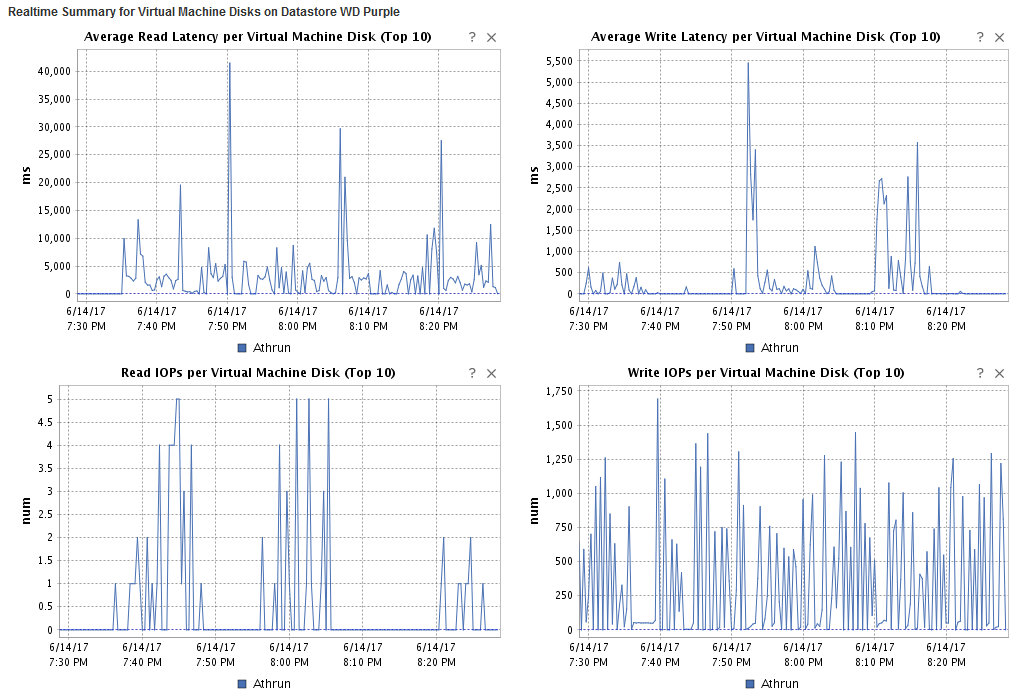

As some of you have noticed, the server still feels sluggish from time to time. I think I may have found the culprit. It's Dynmap! We have Dynmap running on our TFC servers, 4 in total. Those generate a ton of tile updates because of TFC and its huge number of block updates.

Take a look at this:

What you see here are statistics for our WD Purple hard drive which is solely used to store Dynmap tiles. It does nothing else. There's no raid, just a single drive. As you can see the system is sending up to 1600 IOPS (input/output operations per second) to that drive. As a rule of thumb a regular hard drive can only do about 100 IOPS.

The reason this is slowing down the rest of the server is that, while it is a single drive, it is connected to the same raid controller as the rest of the hard drives. When a drive can't keep up with the requested amount of IOPS, a bunch of these get queued. The raid controller has a queue depth of 255. When that queue gets saturated, IOPS meant for other raid arrays are put back in line and need to compete on a flooded I/O path.
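To get a feel for how quickly that queue fills up, here is a rough back-of-the-envelope sketch in Python. It assumes a constant request rate, an empty queue to start with and a single shared queue, which is a simplification of how a real controller schedules I/O. The function name is made up for illustration.

```python
def seconds_until_queue_full(requested_iops: float,
                             drive_iops: float,
                             queue_depth: int) -> float:
    """Time for a queue to fill when requests arrive faster than they complete.

    Simplified model: the backlog grows by (requested - serviced)
    operations every second until it reaches the queue depth.
    """
    backlog_per_second = requested_iops - drive_iops
    if backlog_per_second <= 0:
        return float("inf")  # the drive keeps up, so the queue never fills
    return queue_depth / backlog_per_second


# Numbers from the post: 1600 IOPS requested, about 100 IOPS serviced, depth 255
print(f"{seconds_until_queue_full(1600, 100, 255):.2f} s")  # 0.17 s
```

In other words, at peak load the queue would be full in well under a second, and everything else on the controller ends up waiting behind Dynmap.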

What I'm going to do is move the WD Purple drive to the onboard SATA controller. Since it doesn't use raid anyway, Dynmap can saturate that SATA controller all it wants to. If I'm right about this we should see lower latency for all other raid volumes meaning everything should run smoother.

I'm probably going to do this sometime tonight. So if the server's down, that's why.
